Happy 2019! We’ve seen quite a few Modern developments since we last checked in, and it’s an exciting place to be as we start the new year. This has included a series of Grand Prix Top 8s featuring numerous breakout strategies, none more influential than Arclight Phoenix decks, which have swooped onto the tournament scene and scored significant finishes. Bant Spirits may be replacing Humans as the format’s premier disruptive aggro deck, Grixis Death’s Shadow has returned with a vengeance, and mainstays like BG Rock and UW Control have clung on with high-profile finishes. Meanwhile, Krark-Clan Ironworks (KCI) continues to post outstanding performances, including a commanding 4 slots in the Grand Prix Oakland T8. All of this has led to extensive discussion. Online Modern communities are ablaze with conversation about format health, possible bans/unbans, the viability of various strategies, and an overall retrospective on 2018 Modern. Today, I want to contribute to this discussion with an overview of top decks’ overall match win percentages (MWPs) and matchup MWPs (MMWPs) from 2018, plus a little 2019 data courtesy of GP Oakland.

One of the most pleasant surprises of late 2018 was a series of ChannelFireball articles by contributor Tobi Henke. Henke would be on my shortlist for Magic authors of 2018 with his data-driven analyses of Modern, Standard, and tournament scenes. This has returned quantitative data to the forefront of our conversation, and I am looking forward to more of Henke’s articles. I am particularly impressed with his two deep dives into GP Portland conversion rates and GP Portland MWPs; I strongly encourage everyone to read them. His newest entries have inspired me to continue updating the Modern MWP dataset I drew on in my last post.

The Data and Limitations

The MWP dataset has grown to include results from GP Prague, Vegas, Sao Paulo, Barcelona, Atlanta, and Portland. It also includes matches where decks were known via coverage/Twitter/articles/etc. from all StarCityGames Opens from May through August (6 in total). This results in a dataset of over 15k matches, a relatively robust sample of 2018 Modern results. Of course, as with all datasets, this one has limitations that must be considered when interpreting the MWP results. Some of the biggest are below:

- Most GP data was self-reported through online surveys where players submitted the deck they piloted. See my previous post or Henke’s articles for more on this method and the community members who deserve credit for it. This skews the sample towards enfranchised/knowledgeable players who are plugged into online communities where they see and value such a survey. These players may also be more skilled or experienced overall, and/or have better records that they are willing to share.

- A chunk of GP/SCG data comes from cross-referencing decks seen on Twitter, in articles, or in video coverage with results/standings/pairings. This could bias the sample towards better and/or higher-profile/knowledgeable players, i.e. those who are more likely to be featured or to report on their performance.

- Not all GP/SCG results from any given event are included; just the sample that we have decklists for. This will miss the broader tournament context and might skew results in favor of the most-played decks.

- SCG and GP results/data may not be compatible. Some community members and authors have argued that different types of players play at one event type versus the other. There have been debates about relative competitiveness which, to my knowledge, are unresolved. This might jeopardize our data’s validity as a measure of a deck’s “true” or “overall” MWP.

- MTGO data is not included. As many of you know, Wizards has dramatically cut our access to MTGO data, which makes it very difficult to include in this project. Wizards is likely to make their own MWP calculations primarily from their massive MTGO datasets, as we have seen in previous Standard banlist updates. MTGO and paper dynamics can be very different (e.g. different timing systems, different deck function regarding loops, etc.) and this analysis will miss the MTGO dimension.

- Events are taken from across 2018. On the one hand, this increases N and makes for potentially more reliable results. On the other hand, this might cross-pollinate our sample with incompatible time periods or even decks playing different cards. For instance, Phoenix wasn’t even a legal card for most of 2018. KCI was not using Sai. Dredge did not have Creeping Chill. UW Control did not have to battle Dredge with Creeping Chill. All of these cases and the countless others we can identify show potential issues with mixing time periods. Ultimately, I believed an increased N was worth those costs, but I’d be happy to discuss adjustments if that’s an issue for readers.

- Despite all this work, N is still smaller than I would like for most of these observations. It gets even worse when we look at the MMWPs later. I’ll try to account for this with confidence intervals and transparency in showing the sample N, but there’s no substitute for more observations. Unfortunately, we simply don’t have access to bigger samples and need to work with what we have.

I am sure there are other notable limitations that I have not mentioned here. As with all data-related articles, I encourage readers to not get too stuck on the limitations of a dataset. One of the common criticisms of statistics is that you can twist stats to describe anything. This can be true, but it is also true that we can wring out limitations to invalidate any dataset we look at. This often causes us to miss out on valuable information because we are too busy trying to pick it apart. If anyone has data questions or comments, I’d be happy to discuss them in the comments or another community platform.

Overall MWP Results

The list below gives overall MWP results for all decks with >300 observed matches in the dataset. This data only includes known opponents and excludes mirrors. It represents roughly 75% of all the data we have; N>300 is a cutoff about one standard deviation above the average number of matches for any given deck. I’ve ordered the list by MWP but provided Ns for all decks as well. Finally, I give the 95% confidence interval for each deck’s MWP (i.e. the likely low and high end of the MWP estimate), using the normal approximation to the binomial distribution. As an example of reading the list, KCI averaged a 56.9% MWP over 693 matches, and we can be 95% confident that its true MWP falls somewhere between 53.2% and 60.5%. There’s still a 5% chance it’s even lower or higher. For more on confidence intervals and working with MWP data, I highly recommend you read Karsten’s excellent “How Many Games…” article on ChannelFireball.

- KCI: 56.9% (N= 693, 53.2%-60.5%)

- Dredge: 55.1% (N= 314, 49.6%-60.6%)

- HS Affinity: 55% (N= 460, 50.5%-59.5%)

- Bant Spirits: 53.9% (N= 323, 48.4%-59.3%)

- Counters Company: 53.7% (N= 419, 48.9%-58.5%)

- Humans: 51.8% (N= 1520, 49.3%-54.3%)

- UW Control: 51.6% (N= 888, 48.3%-54.9%)

- Gx Tron: 51.5% (N= 1026, 48.4%-54.5%)

- Hollow One: 50.8% (N= 494, 46.4%-55.2%)

- Storm: 50.1% (N= 425, 45.4%-54.9%)

- Grixis Death’s Shadow: 50.1% (N= 485, 45.7%-54.6%)

- Infect: 49.6% (N= 510, 45.3%-53.9%)

- Burn: 49.6% (N= 1136, 46.7%-52.5%)

- Jeskai Control: 48.4% (N= 833, 45%-51.8%)

- Titanshift: 47% (N= 483, 42.5%-51.4%)

- Jund: 46.3% (N= 734, 42.7%-49.9%)

- Mardu Pyromancer: 46% (N= 678, 42.3%-49.8%)

- Affinity: 44% (N= 609, 40.1%-47.9%)
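The intervals in the list above are consistent with the standard normal-approximation (Wald) interval, p ± 1.96 × sqrt(p(1 − p)/N). Here is a minimal Python sketch, assuming that formula and taking the rounded MWPs from the list as input (the function name `wald_interval` is just illustrative):

```python
import math


def wald_interval(mwp: float, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation (Wald) confidence interval for a win rate.

    mwp: observed match win percentage as a fraction (0.518 for 51.8%).
    n:   number of observed matches.
    z:   critical value; 1.96 gives a 95% interval.
    """
    se = math.sqrt(mwp * (1 - mwp) / n)  # standard error of a proportion
    return mwp - z * se, mwp + z * se


# Rounded MWPs and Ns taken from the list above.
decks = {
    "KCI": (0.569, 693),
    "Humans": (0.518, 1520),
    "Affinity": (0.440, 609),
}

for name, (mwp, n) in decks.items():
    lo, hi = wald_interval(mwp, n)
    print(f"{name}: {mwp:.1%} (N={n}, {lo:.1%}-{hi:.1%})")
```

Because the inputs are rounded, the last digit can drift: with 56.9% as input, KCI’s upper bound comes out at 60.6% rather than the listed 60.5%, presumably because the list was computed from the unrounded win rate.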

Some big takeaways according to this data:

- KCI remains the top-performing deck by MWP in Modern. Incidentally, it is also the top-performing GP deck of 2018 by GP/PT T8s (14 T8s total vs. 12 for Humans and 11 for Gx Tron).

- Of the 11 decks with >50% MWP in this large-N dataset, literally all of them play Noble Hierarch, Ancient Stirrings, Faithless Looting, or Serum Visions/Opt. These are Modern’s pillars: play them.

- The shift from Humans to Bant Spirits appears to be reflected in the MWP data, with Bant Spirits pulling ahead by about two percentage points, albeit on a smaller N.

- Hardened Scales Affinity has supplanted traditional Affinity by MWP, but the GP results are a lot closer: 4 T8s for HS Affinity vs. 3 for traditional Affinity. That said, traditional Affinity has not made a GP T8 since 07/2018, whereas HS Affinity has secured 4 slots in that time. This further vindicates the shift.

- By MWP alone, UW Control appears to be plainly better than Jeskai Control. They have similar Ns and similar reporting patterns in the data, but UW Control is over three points ahead on MWP (51.6% vs. 48.4%). Indeed, UW Control’s low-end confidence bound is basically the same as Jeskai’s average. GP T8s do not quite reflect this, with 9 T8s for UW Control and 8 for Jeskai.

Just for fun, here are the decks with 100<N<300 matches (plus UR Phoenix, with just 43). I wouldn’t be as confident in these MWPs, for a variety of reasons. First, N is getting smaller, which really amplifies the existing dataset limitations. Second, I believe the higher values are heavily biased by specialists reporting their own performance. It’s not that Merfolk or Eldrazi Tron (who the heck even plays E-Tron anymore?) actually have a 55% MWP across all Modern games. It’s more likely that a few specialists play those decks well and sustain a 55% MWP with them. Keeping those and other possible limitations in mind, here’s the data:

- Eldrazi Tron: 55.2% (N= 116, 46.1%-64.2%)

- Merfolk: 55.2% (N= 116, 46.1%-64.2%)

- UR Phoenix: 53.9% (N= 43, 39.1%-68.6%)

- Death and Taxes: 52% (N= 196, 45%-59%)

- Bogles: 51.7% (N= 290, 46%-57.5%)

- Abzan: 51.7% (N= 120, 42.7%-60.6%)

- Bridgevine: 48.6% (N= 185, 41.4%-55.9%)

- Elves: 47% (N= 279, 41.1%-52.8%)

- Amulet Titan: 46.5% (N= 129, 37.9%-55.1%)

- Blue Moon: 45.4% (N= 152, 37.5%-53.3%)

- Ad Nauseam: 43% (N= 107, 33.6%-52.4%)

- Ponza: 36.4% (N= 151, 28.7%-44.1%)

Matchup Results

As many of you will note, overall MWP doesn’t tell the whole story. Matchup MWPs (MMWPs) are in many regards more important, especially in a format as diverse as Modern. Imagine a hypothetical deck with MMWPs of 50/50, 40/60, and 60/40 against three top decks. If it faces each of them equally often, that averages to a 50/50 overall MWP. Now compare another hypothetical deck with a spread of 50/50, 80/20, and 20/80. That’s still a 50/50 overall MWP, but with a huge and variable spread in individual matchups. Full MMWP results give us a sense of that variance and help you decide which decks to bring to tournaments based on expected metagames.
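The “averages to 50/50” step only holds if each opponent is equally common. A small sketch with the two hypothetical decks above shows why the spread matters once the field is skewed: weight each MMWP by an assumed opponent share to get the expected overall MWP. The opponents A, B, C and their shares are placeholders, not data from this article.

```python
def expected_mwp(mmwps: dict[str, float], shares: dict[str, float]) -> float:
    """Expected overall MWP: each matchup MWP weighted by that opponent's share."""
    total = sum(shares.values())  # normalize so shares need not sum to 1
    return sum(mmwps[opp] * shares[opp] / total for opp in mmwps)


# The two hypothetical decks from the text (opponents A, B, C are placeholders).
steady = {"A": 0.50, "B": 0.40, "C": 0.60}
swingy = {"A": 0.50, "B": 0.80, "C": 0.20}

even_meta = {"A": 1, "B": 1, "C": 1}    # every opponent equally common
skewed_meta = {"A": 1, "B": 2, "C": 1}  # opponent B is half the field

for label, meta in [("even", even_meta), ("skewed", skewed_meta)]:
    print(label, round(expected_mwp(steady, meta), 3), round(expected_mwp(swingy, meta), 3))
```

Both decks sit at 50% in the even field, but if opponent B makes up half the field, the steady deck drops to 47.5% while the swingy deck rises to 57.5%.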

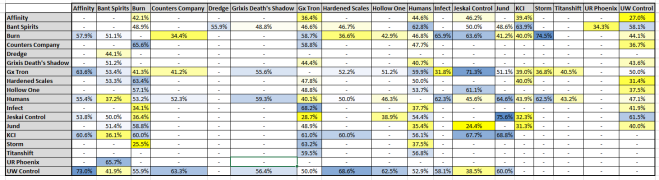

First, here’s the MMWP cross-tabulation for our top 18 decks (i.e. all decks with >300 observed matches). When reading the table, always read the deck on the left (Y axis) as having the listed MMWP against the deck along the top (X axis). The other deck’s MMWP is always 100% minus that value. The number in parentheses is the matchup sample N. Here are two examples of reading the data:

- Humans beat Bant Spirits in 37.2% of their 43 matches. By contrast, Bant Spirits beat Humans in 62.8% of their 43 matches.

- UW Control beat Humans in 52.9% of their 85 matches. By contrast, Humans beat UW Control in 47.1% of their 85 matches.
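Because the mirror-image cell is always 100% minus the stored value, a cross-tab only needs one entry per matchup. A minimal Python sketch of that lookup, using the two matchups quoted above; the dictionary layout and the `lookup` name are illustrative assumptions, not how the linked sheet is built:

```python
# One entry per matchup: (row deck, column deck) -> (row deck's MMWP, matches).
MMWP = {
    ("Humans", "Bant Spirits"): (0.372, 43),
    ("UW Control", "Humans"): (0.529, 85),
}


def lookup(row: str, col: str) -> tuple[float, int]:
    """Return the row deck's MMWP against the column deck, plus the sample N."""
    if (row, col) in MMWP:
        return MMWP[(row, col)]
    rate, n = MMWP[(col, row)]  # stored the other way round
    return 1 - rate, n          # the other deck's MMWP is 100% minus the stored value


print(lookup("Bant Spirits", "Humans"))  # Bant Spirits' side of the 43 matches
print(lookup("Humans", "UW Control"))    # Humans' side of the 85 matches
```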

With that in mind, here’s the data, both as a screenshot of the table and as a linked Google Sheets table:

2018 MMWPs for Top Modern Decks (all Ns)


As you can see, we have a few robust sample sizes here that should give us reasonable confidence in the estimated MMWP. For instance, Gx Tron and Humans had 137 observed matches in the entire dataset, so the estimate of about a 60%-40% matchup in Gx Tron’s favor is probably fairly accurate. Unfortunately, not all matchups meet that standard. About half have fewer than 20 observed matches, and almost 1 in 5 don’t even have 10 datapoints. I can hear the angry Internet comments about small Ns already.

One way to account for this is to remove every matchup that doesn’t meet a sample-size threshold. The table below does just that, excluding any matchup without an above-average number of matches (the average was 30). It is color-coded from better matchups (blue) to worse matchups (yellow):

2018 MMWPs for Top Modern Decks (N>30 matches)


A better way to account for a smaller N is to construct confidence intervals around the average observed MMWP. This is similar to our MWP confidence interval calculations above, but the samples get a lot smaller. Karsten linked to a great online calculator that automates these calculations using different methods. Unfortunately, those methods were a pain to automate in Excel on a cross-tabulation, so I’m using a simpler and less exact interval estimate that I could actually code: the same normal approximation used for the overall MWPs. If someone wants to go through the legwork of applying other interval-calculation methods to the data, please go ahead. For example, the Clopper-Pearson Exact method is a great tool that Karsten recommends, but if you try to code that in Excel, you’re gonna have a bad time. The normal approximation to the binomial distribution is a lot easier to work with, even if its intervals can be less accurate at small N. The table below shows 95% intervals for all matchups with 10+ observed matches.

2018 MMWP Confidence Intervals for Top Decks (N>10 matches)


This interval table is overwhelming for me, let alone readers, and I struggled to find a better way to format it. You read it like the regular MMWP matrix, except that each cell gives a range instead of a single value. For example, there’s a 95% chance (our confidence level) that Hollow One’s true MMWP versus Affinity is somewhere between 3% and 41.4%. That’s a pretty wide range because we only observed 18 matches in the sample. Larger samples still give a range: Humans vs. Grixis Death’s Shadow had 54 datapoints in the sample, and there’s a 95% chance that the true MMWP is somewhere between 46.2% and 72.4% for Humans, or 27.6% and 53.8% for GDS.
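For readers who want to try the exact method mentioned above, here is a standard-library Python sketch that computes both the normal-approximation (Wald) interval, which reproduces the quoted figures to within rounding, and a Clopper-Pearson exact interval found by bisection on the binomial CDF. The win counts (4 of 18 for Hollow One vs. Affinity, 23 of 36 for Bant Spirits vs. KCI) are inferred from the quoted percentages and are an assumption; the helper names are illustrative.

```python
import math


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def solve_increasing(f, target: float, iters: int = 60) -> float:
    """Bisection on [0, 1] for f(p) = target, where f increases with p."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def wald(wins: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Normal-approximation interval, as used for the tables above."""
    p = wins / n
    half = z * math.sqrt(p * (1 - p) / n)
    return p - half, p + half


def clopper_pearson(wins: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact binomial interval."""
    # Lower bound: the p at which P(X >= wins) = alpha/2 (0 if there are no wins).
    lower = 0.0 if wins == 0 else solve_increasing(
        lambda p: 1 - binom_cdf(wins - 1, n, p), alpha / 2)
    # Upper bound: the p at which P(X <= wins) = alpha/2 (1 if there are no losses).
    upper = 1.0 if wins == n else solve_increasing(
        lambda p: 1 - binom_cdf(wins, n, p), 1 - alpha / 2)
    return lower, upper


for label, wins, n in [("Hollow One vs. Affinity", 4, 18), ("Bant Spirits vs. KCI", 23, 36)]:
    w_lo, w_hi = wald(wins, n)
    c_lo, c_hi = clopper_pearson(wins, n)
    print(f"{label}: Wald {w_lo:.1%}-{w_hi:.1%}, Clopper-Pearson {c_lo:.1%}-{c_hi:.1%}")
```

For Hollow One vs. Affinity, the Wald interval reproduces the 3%-41.4% above, while the exact interval comes out at roughly 6.4%-47.6%. That is wider and shifted upward, a reminder that the normal approximation tends to be too narrow at small N.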

As a whole, these confidence intervals might seem overwhelming, needlessly wide, unhelpful, and/or overly technical. They become more helpful if you have your own expectations about a matchup and can triangulate them with these intervals. For instance, you might believe that Bant Spirits is favored against KCI. Looking at our MMWP intervals, we see the matchup went 63.9% in Bant Spirits’ favor over 36 matches. You also know that there’s a 95% chance that the true MMWP is somewhere between 48.2% and 79.6%. Based on all three of those observations, you could reasonably conclude this is a “favored” matchup, probably in the 55%-60% range for Bant Spirits. You could apply similar logic to any matchup in the grids, shifting your MMWP expectations accordingly.

Overall MWP/MMWP Conclusions

I’m about as exhausted looking at numbers as you are (probably more by now). If you can’t stand to read another table, here are some big matchup takeaways for top decks and their relative performance against each other.

- Want to beat Gx Tron? Strong options with larger samples to back up their performance are Burn (58.7%), Infect (68.2%), KCI (61%), and Storm (63.2%). If you want to play a so-called “fair” deck and still have game against Tron, check out UW Control and its 50-50 matchup.

- Want to beat Bant Spirits? Look at UR Phoenix (65.7%). All other decks are either unfavored against Bant Spirits or so close to 50-50 that the matchup might actually be even rather than slightly favorable. This is probably one reason Bant Spirits has surpassed Humans: it has far more 50-50 matchups.

- Speaking of Humans, the tribe posts weak matchups against Bant Spirits (37.2%), Gx Tron (40.1%), Jeskai Control (45.6%), and Titanshift (43.2%). This is further evidence supporting a shift away from Humans towards Bant Spirits for large, unknown metagames.

- Bant Spirits had the most matchups in the 45%-55% range at 9 total, followed by Gx Tron and Humans at 8 each. Incidentally, each of those decks had only a single matchup that was sub-30% in the opponent’s favor. KCI had the fewest 45%-55% matchups at just 1, followed by UR Phoenix and Storm at 2 each; these are polarizing strategies with most MMWPs outside that 50-50 band.

- Gx Tron, Hardened Scales Affinity, KCI, and UW Control tied for the most matchups that were >50% in their favor, at 10 each. Bant Spirits could also be here, depending on where you place its MMWPs within their confidence intervals. These are all solid choices with lots of even or better matchups.

- UW Control appears better than Jeskai Control. Jeskai outperforms UW Control in five matchups (Bant Spirits, Humans, Jund, Storm, Titanshift), but UW Control is still positive in the Humans and Jund matchups. Both are negative against Titanshift. If you expect lots of Bant Spirits and Storm, play Jeskai. Otherwise, play UW Control. Incidentally, Jeskai has the edge in the head-to-head matchup, so factor that into your selection as well. As a final influencing factor, UW Control is dead even with Tron at 50-50 over 60 matches. Poor Jeskai is in the 30-70 range over 87 matches. Yuck.
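Tallies like the ones above (matchups in the 45%-55% band, matchups >50%) are straightforward to reproduce from one row of the MMWP table. A sketch, assuming a row is stored as opponent → MMWP; the example row holds only the Humans matchups quoted in this article, not the full table, so its counts are illustrative only.

```python
def tally(row: dict[str, float], low: float = 0.45, high: float = 0.55) -> dict[str, int]:
    """Count a deck's matchups inside the near-even band and on either side of 50%."""
    return {
        "near_even": sum(low <= r <= high for r in row.values()),
        "favored": sum(r > 0.5 for r in row.values()),
        "unfavored": sum(r < 0.5 for r in row.values()),
    }


# Partial Humans row: only matchups whose MMWP is quoted in this article.
humans = {
    "Bant Spirits": 0.372,
    "Gx Tron": 0.401,
    "Jeskai Control": 0.456,
    "Titanshift": 0.432,
    "UW Control": 0.471,
    "Grixis Death's Shadow": 0.593,
}

print(tally(humans))
```

An exact 50% matchup is counted in the near-even band but as neither favored nor unfavored; adjust the comparisons if you prefer a different convention.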

As usual, please moderate any of these takeaways with the associated sample sizes and all the other limitations I already mentioned. I’m excited to hear more conclusions from readers in the comments and on any online communities this data finds its way to.

Thanks for reading and for looking to the limited data we have to help make sense of the format. Wizards may not give us as much data as many of us might want, but there’s still a lot out there if you have the patience to dig through what we have. Data-driven analyses like these should always be taken alongside the qualitative and opinion pieces; it’s a mistake to assume that data/stats totally invalidate other content. Let me know if you have any questions, suggestions, criticisms, ideas, or other thoughts, and I look forward to another year of Modern.

P.S. Unban Stoneforge Mystic.

Great job considering we’re operating with imperfect data. Thanks for putting this together.

Absolutely fantastic job! Considering the amount of data, everything is prepared in a nice, compact and readable form. Thanks for that!

Hey my friend, what’s up?

Very, very cool analysis, so cool that I transposed everything to Excel and put together some graphics to help us get insights. I’m leaving it attached as a Google Drive link.

Link for download:

https://drive.google.com/file/d/1bGXV0W267LCYbVPgRbqxXSO0s-nDCT78/view?usp=sharing

Congrats.
